The AI Act has finally overcome its latest hurdle in the European Union’s legislative procedure after its adoption by the European Parliament. However, a gap in its final version draws attention to the weakening of obligations aimed at reducing AI’s environmental footprint, despite the technology’s severe impacts on local communities, fauna and flora, both within and beyond European borders, particularly in the Majority World. This commentary investigates the opportunities missed by EU bodies to address such impacts during the AI Act debates.

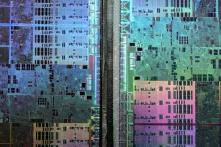

The advancement of artificial intelligence makes more and more headlines worldwide. Many of them highlight hopes about its potential to help combat environmental degradation. At the same time, accounts of the digital industry's negative social and environmental impacts proliferate. Water, energy and minerals are some of the elements necessary to build and maintain the physical and virtual infrastructure that enables digital transformation and the development of AI systems in particular.

These resources are frequently extracted from territories in the Majority World, such as the Democratic Republic of Congo, where mineral extraction is commonly linked to pollution, poor working conditions and child labour; or the Brazilian Amazon, where companies such as Amazon, Google, Microsoft and Apple have benefitted from the illegal, brutal extraction of gold and cassiterite from Indigenous territories, which drives what many have called a genocide of the Yanomami population. Such examples illustrate how countries in the Majority World often bear the brunt of digital technologies' environmental and social impacts. Addressing these concerns requires active measures, including regulation. But to what extent have regulatory debates been addressing this issue?

A tale of frustrated progress – the EU AI Act’s earlier steps

The European Union’s AI Act is an interesting case study. Recently approved, it has a comprehensive scope, covering most types of AI applications. Understanding how it has approached the environmental impacts of AI can thus help map the current state of debates in this field and show how other regulations may be influenced.

Since the first draft proposed by the European Commission three years ago, its provisions have changed considerably, especially the ones addressing AI’s sustainability. Back then, reducing AI’s environmental impact was delegated strictly to voluntary codes of conduct.

As the bill advanced in the European Parliament, new provisions on the issue were included. The version adopted by the lawmakers introduced the principle for developing and using AI systems in a sustainable and environmentally friendly manner (Article 4a(f), Parliament’s version). It also specified that high-risk AI systems should have logging capabilities to record energy consumption and measure or calculate resource use, and providers should assess environmental impact throughout the system's lifecycle (Article 12(2a), Parliament’s version).

Article 28b(2)(d) required providers of foundation models to adhere to standards for reducing energy and resource use, improving energy efficiency, and enabling measurement and logging of environmental impact. This provision would apply to the entire life cycle of the model, from the earliest stages of design and development until its deployment and use, thus going much further than the system’s training.

Finally, the Parliament’s version also established that fundamental rights impact assessments for high-risk AI systems should include a measurement of reasonably foreseeable adverse impacts on the environment of putting the system into use (Article 29a(g), Parliament’s version). The wording of this provision also left room for the interpretation that this measurement could encompass the potential environmental impacts of the system during deployment, and not just training.

The AI Act trilogue version’s environmental provisions

The above provisions show that the European Parliament was more committed to environmental protection than the Commission. However, a few months later, during the trilogue negotiations stage of the EU's legislative procedure, representatives of the Council, Commission and Parliament reached an agreement on a text that significantly weakened environment-related provisions, removing most of the abovementioned rules.

The new version provides rules related to standardisation requests, codes of practice and information disclosure, which, although better than nothing, do not seem to respond effectively to the AI industry's significant environmental impacts.

Article 40 establishes that the Commission should request standardisation bodies to provide deliverables ‘on reporting and documentation processes to improve AI systems resource performance’. These should include standards for reducing energy consumption and ‘other resources consumption’ of the high-risk AI system during its lifecycle – thus encompassing the system’s training and deployment – and on ‘energy-efficient development of general-purpose AI models’.

The provision could potentially reduce AI’s resource demand if well implemented. However, relying on standardisation bodies to provide environmental standards may prove not only time-consuming, but also insufficient. As a significant proportion of the members of these bodies come from for-profit organisations, incentivising solutions that are less environmentally impactful but increase costs might be a challenge.

The wording of Article 40 is also problematic for its focus on energy expenditure, referring to all other environmental impacts of AI systems as just the consumption of other ‘resources’. The Commission can resolve this vagueness through specific requests to standardisation bodies, but this will depend on the goodwill of policymakers. If the Commission’s request is not specific or explicit enough, standards may not sufficiently cover impacts beyond energy consumption.

Finally, even if these standards prove exemplary in bringing forward solutions that effectively reduce these systems’ consumption of resources, positive results may be weakened by the fact that adherence to standards is mostly voluntary – although it creates a presumption of compliance under the AI Act – and is demonstrated through self-assessments that need not be made public.

Beyond standards, the AI Act establishes that providers of general-purpose AI (GPAI) systems – a category that includes generative systems such as ChatGPT – should provide information on the 'known or estimated energy consumption of the model’ (Annex XI).

Such a rule may prove of limited effectiveness. First, applying exclusively to GPAI systems – which are indeed high consumers of natural resources, but not the only ones – leaves aside the rest of the industry. Second, by focusing strictly on energy expenditure, it once again disregards other impacts, such as those relating to water and mineral consumption and electronic waste. Third, the Act does not demand a continuous mapping of resource consumption after the system is made available on the market, leaving undocumented all the expenditure that goes beyond the training phase of the system.

Finally, Article 69 establishes that codes of conduct could include measures to assess and minimise AI systems’ environmental impact. However, previous voluntary codes of conduct in the EU have not been very effective in achieving their objectives. An example is the EU’s Code of Practice on Disinformation, which has been consistently criticised by scholars for failing to tackle harmful content on digital platforms.

What now for regulation?

The EU AI Act’s approach to environmental issues is a missed opportunity to make the AI industry more environmentally sustainable. Stronger national regulations, alongside a concerted international effort tackling multiple fronts, are necessary.

Legislation should be more effective with regard to information disclosure by both developers and deployers of AI systems. Industry agents and governments have consolidated a practice of opacity that inhibits access to meaningful information on the environmental impacts of AI. OpenAI, for instance, does not disclose estimates of the water it consumes to train or maintain ChatGPT, which prevents a detailed understanding of its actual impact. In Chile, the opacity around a Google project to build a data centre in Cerrillos was challenged by a local community in the country’s courts until it succeeded in obtaining further information. The activists also managed to suspend the construction of the centre through another court ruling. The amount of potable water to be consumed by Google – about 7.6 million litres per day – was outrageous given the severe droughts the region has suffered in recent years.

Access to such data, it is worth mentioning, could help not only community members but also scholars and regulators, who would be better informed to benchmark and establish standards for how much material, energy and water certain kinds of applications should consume to be operational. Information should encompass the consumption of resources both during the training of the systems and during their deployment, a period in which resource demand continues to escalate.

Regulation could also incentivise the industry to conduct environmental impact assessments. Assessments could help, for instance, to identify how hardware and design choices influence resource consumption, or how the location of facilities and the timing of training affect the environmental footprint, depending on the region's energy matrix.

International cooperation also has a role to play. It could, for instance, help intensify the fight against illegal mineral extraction. Supporting Majority World countries that supply raw materials for AI development, including in regions like Latin America, Africa and Southeast Asia, is essential. Cooperation should involve financial and investigative support and ensure that populations affected by this industry actively participate in designing technologies and policies, and benefit from them, including through capacity-building projects. Cooperation could also help hold companies accountable for relying on the cross-border trade of illegally extracted minerals to build their products, or for making design choices that are highly ecologically impactful without social benefits that outweigh such costs. Regulatory interoperability and global cooperation are fundamental to achieving these goals.

These are just a few possibilities to be considered when tackling the digital industry’s environmental impact. They need to be further developed and complemented by structural changes in the functioning of these markets, which are highly concentrated, both in terms of their relevant players and in the benefits they provide. This includes supporting communities in understanding and shaping the technologies that are most relevant to address the inequalities and injustices that prevail in our societies, especially in the Majority World.

As a final thought, it is key that, when assessing AI’s environmental impacts, one considers the impacts of the digital industry as a whole, from the most basic physical infrastructure to the most complex and abstract systems. That will help avoid challenges in mapping the resource consumption of every single application, which has to be seen as part of a broader, intricate network of technologies. This systemic, full life cycle approach is particularly relevant as the definition of AI gets increasingly blurred by the hype surrounding it.

The views and opinions in this article do not necessarily reflect those of the Heinrich-Böll-Stiftung European Union.